Introduction

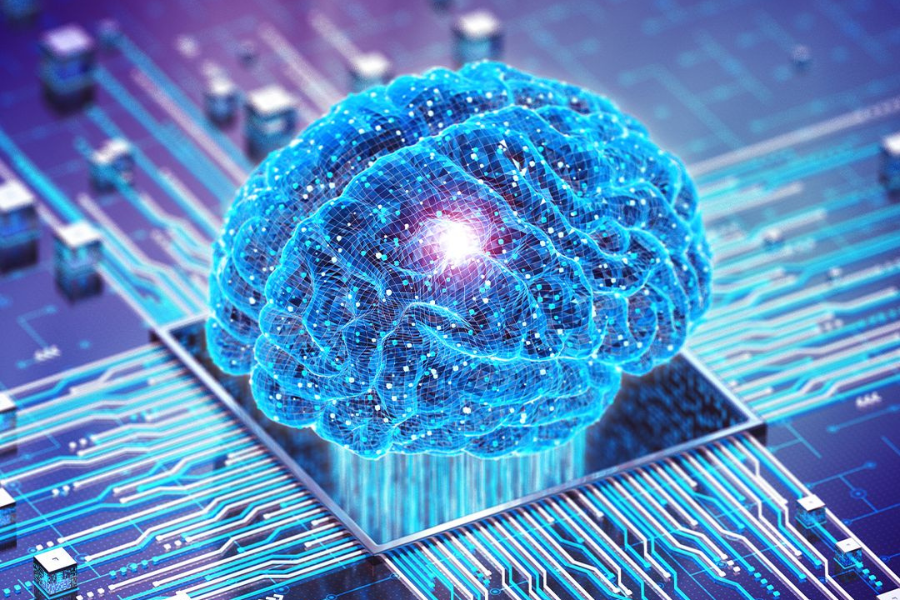

AI is moving from single-skill models (text only or vision only) to multimodal systems that understand text, speech, images, video, and even gestures within a single model. At the same time, agent platforms are making it easy to spin up task-oriented AIs, such as copilots for operations, design, support, and field work, often built with low-code/no-code tools. Together, these two trends are redefining how products are designed and how teams execute work, from smart classrooms and hospitals to logistics hubs and city control rooms.

What is Multimodal AI?

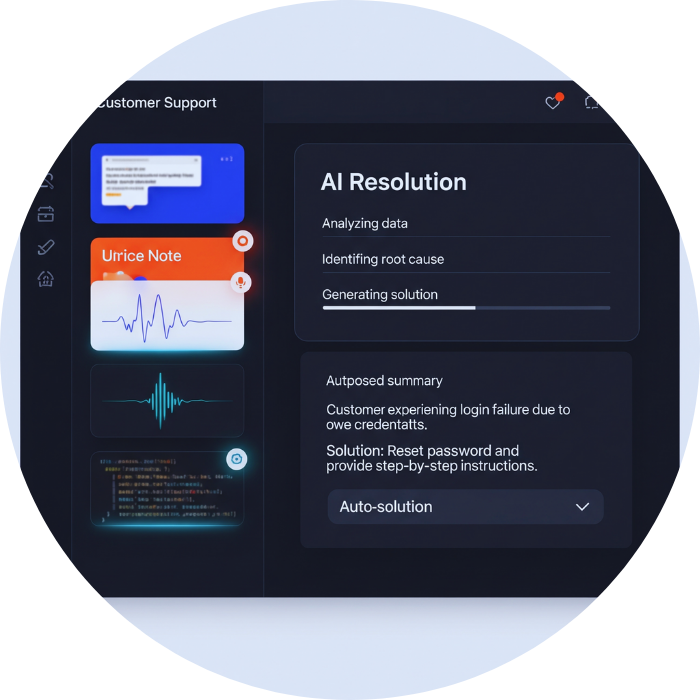

Multimodal AI fuses signals from multiple inputs to build a richer context and respond more accurately. Instead of asking a text bot to “fix the code” and then manually sharing a screenshot, you can show the bug, tell the agent what happened, and gesture to the broken UI element. The model aligns all of that, reasons about it, and acts.
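To make the "align, then reason" idea concrete, here is a minimal Python sketch of the alignment step only. It assumes each input arrives as a timestamped signal and groups signals that occur close together in time, so that a spoken complaint, a screenshot, and a pointing gesture end up in one context. The `Signal` class, the two-second window, and the payload strings are illustrative assumptions, not part of any specific product or model API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Signal:
    modality: str     # e.g. "speech", "image", "gesture"
    timestamp: float  # seconds since the session started
    payload: str      # transcript text, image path, or gesture label


def align_signals(signals, window=2.0):
    """Group signals that fall within `window` seconds of a group's first signal."""
    groups = []
    for sig in sorted(signals, key=lambda s: s.timestamp):
        if groups and sig.timestamp - groups[-1][0].timestamp <= window:
            groups[-1].append(sig)
        else:
            groups.append([sig])
    return groups


def build_context(group):
    """Merge one group into a single dict keyed by modality.

    If a modality appears twice in a group, the later signal wins.
    """
    return {sig.modality: sig.payload for sig in group}


signals = [
    Signal("speech", 0.5, "the save button does nothing"),
    Signal("image", 1.0, "screenshot.png"),
    Signal("gesture", 1.8, "point:save_button"),
    Signal("speech", 10.0, "thanks"),
]
contexts = [build_context(g) for g in align_signals(signals)]
```

A real multimodal model learns this alignment internally rather than relying on a fixed time window; the sketch only illustrates why combining the three inputs gives the agent more to work with than text alone.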

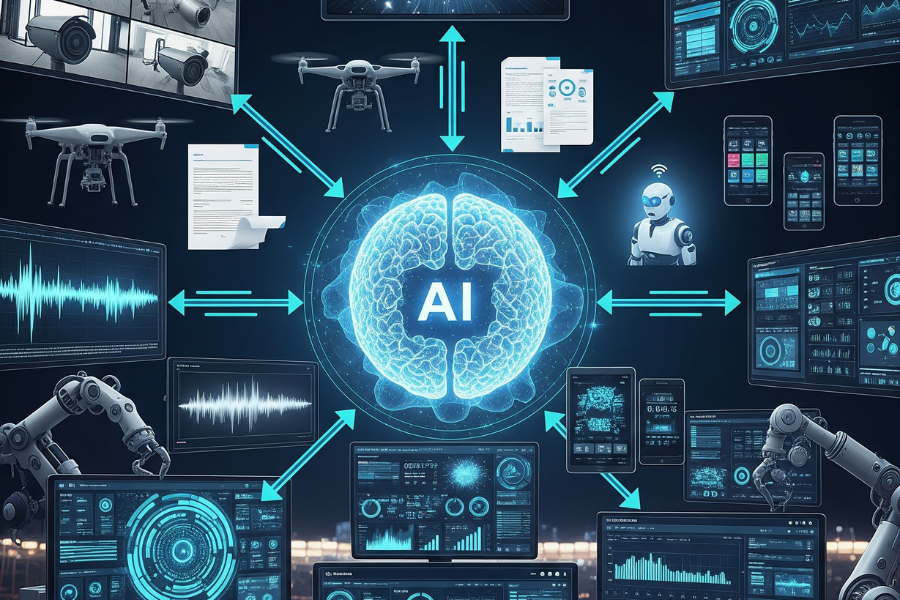

Core capabilities:

Vision + Language: Read charts, schematics, and forms; describe, summarize, or extract structured data.

Speech + Language: Real-time transcription, dialogue, and voice coaching with emotion/intent detection.

Gesture + Environment: Camera-based hand/pose tracking for touchless control in labs, clean rooms, and surgical theaters.

Action: Call tools, APIs, and robots, turning perception into outcomes.
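The "Action" capability can be sketched as a small tool dispatcher. The example below assumes the model emits a JSON object naming a tool and its arguments; that output format, the `TOOLS` registry, and the `create_ticket` function are all hypothetical and stand in for whatever tool-calling convention a given platform actually uses.

```python
import json

# Registry of callable tools, keyed by function name (hypothetical design).
TOOLS = {}


def tool(fn):
    """Decorator that registers a function as a callable tool."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def create_ticket(title: str, priority: str = "normal") -> dict:
    # Stand-in for a real API call to a ticketing system.
    return {"status": "created", "title": title, "priority": priority}


def dispatch(model_output: str) -> dict:
    """Parse the model's JSON tool request and run the matching tool."""
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError:
        return {"error": "model output was not valid JSON"}
    if not isinstance(call, dict):
        return {"error": "model output was not a JSON object"}
    name = call.get("tool")
    if not isinstance(name, str) or name not in TOOLS:
        return {"error": f"unknown tool: {name}"}
    try:
        return TOOLS[name](**call.get("args", {}))
    except TypeError as exc:
        # Raised for missing, unexpected, or malformed arguments.
        return {"error": f"bad arguments for {name}: {exc}"}


result = dispatch('{"tool": "create_ticket", "args": {"title": "Save button broken"}}')
```

Returning an error dict instead of raising lets the agent loop feed the failure back to the model, which can then retry with corrected arguments.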

What is Ying?

Ying is Zhipu AI’s multimodal creative suite that focuses on AI-generated video and digital content. Positioned as China’s answer to Runway or Pika Labs, Ying enables:

Text-to-video generation with cinematic quality.

Storyboarding tools for creators and educators.

Integration with ChatGLM, Zhipu AI’s large language model, to plan scripts, dialogues, and visuals in one pipeline.

Enterprise use cases from training videos to marketing campaigns.
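The script-to-video pipeline described in the list above can be pictured as two stages: a planning stage that turns a script into shots, and a generation stage that turns each shot into a video request. The Python sketch below models only the data flow. The `Shot` class, `plan_shots`, and `to_video_requests` are hypothetical names, the sentence splitting is a crude stand-in for what a language model would do, and the request dicts do not reflect the real ChatGLM or Ying APIs.

```python
from dataclasses import dataclass


@dataclass
class Shot:
    index: int
    narration: str      # line spoken or shown during the shot
    visual_prompt: str  # text prompt handed to the video generator


def plan_shots(script: str, style: str = "cinematic") -> list[Shot]:
    """Split a script into one shot per sentence (placeholder for an LLM planner)."""
    sentences = [s.strip() for s in script.split(".") if s.strip()]
    return [
        Shot(index=i + 1, narration=s + ".", visual_prompt=f"{style} shot: {s.lower()}")
        for i, s in enumerate(sentences)
    ]


def to_video_requests(shots: list[Shot], seconds_per_shot: int = 4) -> list[dict]:
    """Build one generation request per shot (the payload shape is an assumption)."""
    return [
        {"shot": shot.index, "prompt": shot.visual_prompt, "duration_s": seconds_per_shot}
        for shot in shots
    ]


shots = plan_shots("Open on a quiet lab. A robot arm lifts a sample.")
requests = to_video_requests(shots)
```

Keeping the storyboard as structured data between the two stages means a creator can review or edit individual shots before any video is rendered.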

High-Impact Use Cases

Education & Training – AI tutors powered by ChatGLM create adaptive lesson plans, while Ying generates illustrative video material.

Enterprise Productivity – Internal copilots draft reports, summarize meetings, and even generate explainer animations.

Media & Entertainment – Studios and influencers leverage Ying for rapid video prototyping, dubbing, and cross-language storytelling.

Scientific Research – ChatGLM aids literature reviews, hypothesis testing, and coding in fields like biotech and physics.

Agent Platforms: From Chat to Action

Building Blocks

ChatGLM Models: Language models built on the GLM (General Language Model) architecture and designed to scale efficiently.

Ying Video Engine: Multimodal diffusion models optimized for motion coherence.

Enterprise Tools: APIs, fine-tuning frameworks, and private deployment options.

Knowledge Integration: Links to Chinese academic and government datasets.

Why It Matters

National Strategy: Aligns with China’s national plan to become a global leader in AI by 2030.

Competitive Edge: Provides a domestic alternative to GPT-4, Gemini, and Claude.

Multimodal Power: Combines text reasoning and video generation in one ecosystem.

Adoption Curve: Already in pilots with universities, broadcasters, and state-owned enterprises.

Challenges

Regulatory Oversight: Must comply with China’s strict generative AI laws.

Global Reach: Limited adoption outside China due to language and policy barriers.

Competition: Faces rivals like Baidu’s Ernie Bot and Alibaba’s Qwen.

Conclusion

Zhipu AI’s ChatGLM and Ying represent a two-front push in AI reasoning and AI creativity. ChatGLM strengthens productivity and enterprise adoption, while Ying opens the door to next-generation content creation. Together, they strengthen Zhipu AI’s position as a leader in the Chinese generative AI landscape.

-Futurla