Introduction

Artificial Intelligence (AI) is shifting from narrow, task-specific systems toward more general-purpose ones. At the heart of this transformation are foundation models: large-scale AI systems trained on enormous datasets that can be adapted to power many different applications. From OpenAI’s GPT series to Baidu’s ERNIE Bot and Zhipu AI’s ChatGLM, these models have become core drivers of AI progress worldwide.

In 2025, foundation models are the engine room of AI Core Trends. They act as flexible, adaptable “brains” that can be fine-tuned for healthcare, education, finance, manufacturing, and beyond, which makes them one of the most influential technologies of the decade.

What Are Foundation Models?

Unlike traditional AI systems, which were built for single tasks (like recognizing cats in photos), foundation models are trained on broad, multi-domain and often multimodal datasets. They learn general patterns in language, vision, audio, or several of these together, and can then be adapted for specific downstream tasks.

Key Features:

Scale – Built with billions of parameters and trained on vast amounts of data, they can approach human-level performance on many language and reasoning tasks.

Versatility – The same model can write essays, analyze X-rays, or generate software code.

Multimodality – Beyond text, many models now understand images, audio, video, and gestures.

Adaptability – Fine-tuning allows companies to build domain-specific solutions without starting from scratch.

Applications Driving Growth

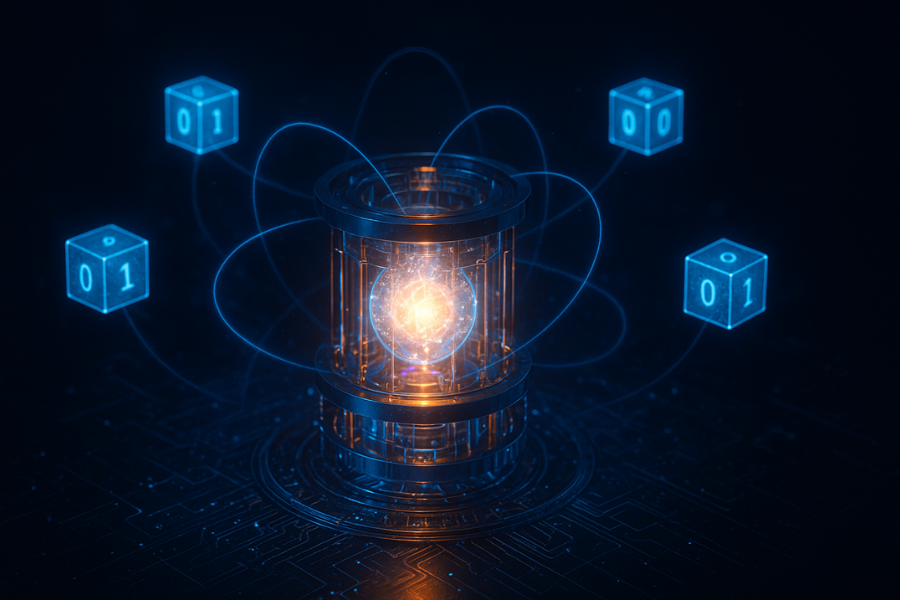

Healthcare

Foundation models assist in reading radiology scans, drafting patient notes, and even predicting disease progression. In China, AI-powered imaging tools are already helping hospitals in rural areas diagnose faster.

Finance

Robo-advisors powered by large language models (LLMs) offer investment guidance, while related models support fraud detection and risk scoring. Ant Group and Tencent are integrating foundation models into their digital finance platforms.

Manufacturing

Vision-language models help factories with real-time quality assurance, defect detection, and predictive maintenance, all key aspects of Industry 4.0.

Education

Foundation models power personalized tutoring platforms, generating quizzes, explanations, and feedback based on each student’s strengths and weaknesses.

Challenges Ahead

Bias and Fairness

Large datasets can embed cultural or social biases, which can lead to discriminatory outputs.

Energy Consumption

Training models with hundreds of billions of parameters requires enormous computational resources and electricity, raising sustainability concerns.

Data Privacy

Because these models often process sensitive information, such as patient records and financial data, robust safeguards are essential.

Regulation

Governments worldwide are drafting AI governance frameworks, but consensus on ethical use, accountability, and international standards is still evolving.

The Road Ahead

Foundation models are entering a phase of specialization and efficiency. Rather than focusing only on ever-larger models, companies are developing domain-specific versions (for example, for healthcare or legal work) and smaller, optimized models that can run efficiently on local devices.

The next five years may also see multimodal foundation models becoming the norm, blending voice, text, and vision into seamless, human-like interactions.

Conclusion

Foundation models are the core of modern AI, setting the stage for breakthroughs across industries. Their adaptability and scale mean they are not just powering apps; they are reshaping economies. While challenges of ethics, cost, and governance remain, foundation models are positioned to be the driving force of AI Core Trends in 2025 and beyond.

-Futurla