Introduction: AI is Smart — But Can We Understand It?

From recommending what to watch on Netflix to diagnosing medical conditions, AI now influences decisions that affect lives. But there’s one major issue: we don’t always know how or why these AI systems make their decisions.

Many advanced models — especially deep learning systems — act like “black boxes.” They give outputs, but can’t explain themselves clearly. This raises a huge question: Can we trust an AI if we can’t understand it?

Enter Explainable AI (XAI) and Ethical AI, two movements that aim to make AI transparent, fair, and accountable. In 2025 and beyond, XAI is set to become a core part of regulation, industry best practice, and public trust in intelligent systems.

What is Explainable AI (XAI)?

Explainable AI refers to methods and techniques that make the decision-making process of AI systems understandable to humans. It’s about showing “why” the AI made a decision — not just “what” it decided.

For example:

A credit-scoring AI denies someone a loan — why?

A medical AI recommends surgery — based on what?

A self-driving car swerves — what triggered the action?

With XAI, users, developers, and regulators can gain insights into the logic behind these choices.

Real-Life Scenarios Where XAI Matters

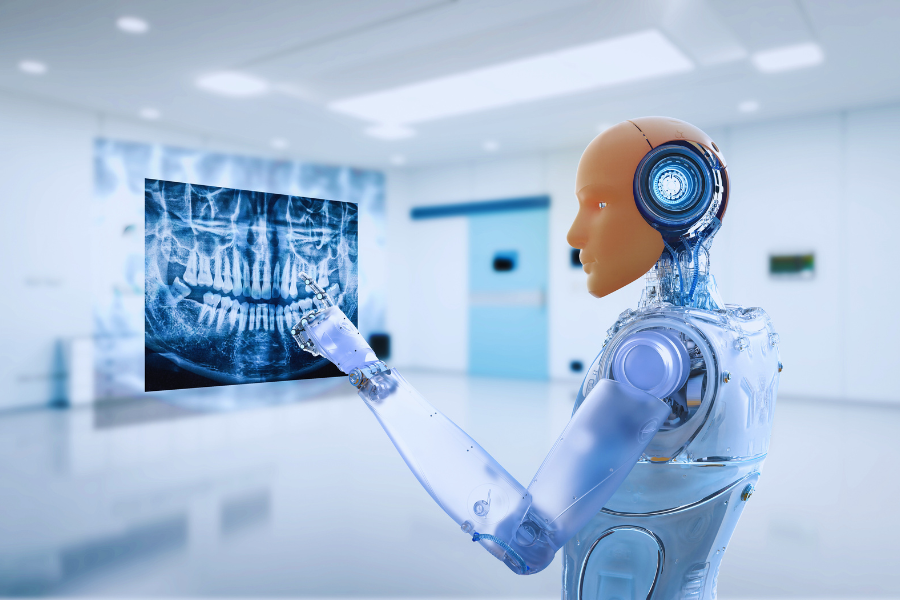

Healthcare

AI can now detect diseases like cancer or heart conditions from scans. But without explainability, doctors hesitate to trust it. XAI helps medical professionals see the features (e.g., tumor edges, texture, blood flow) the AI used to make its diagnosis.

Finance & Credit Scoring

Automated systems decide who gets loans or insurance. XAI helps ensure customers aren’t unfairly rejected because of biased data or proxies for protected traits (e.g., ZIP code standing in for ethnicity), and helps companies stay compliant with fair lending laws.

Recruitment & Hiring

AI tools are used to filter resumes and rank candidates. If the system is biased by gender or name origin, XAI helps detect and correct that bias before harm is done.

Autonomous Vehicles

In accidents or near-misses, XAI enables analysis of what decisions the AI made and why — essential for legal accountability.

What is Ethical AI?

Ethical AI goes beyond explainability — it’s about building AI systems that are aligned with human values such as fairness, safety, non-discrimination, accountability, and privacy.

Key principles include:

Fairness: No discrimination based on race, gender, or location

Transparency: Clear documentation of how models are trained and used

Privacy: Respect for data and individuals’ rights

Accountability: Systems should be auditable and traceable
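As a rough illustration of how the fairness principle can be checked in practice, the sketch below compares approval rates across groups in a model's decisions. It computes each group's selection rate and the ratio of the lowest rate to the highest; a ratio below 0.8 (the “four-fifths rule” used as a rule of thumb in U.S. employment-selection guidelines) is often treated as a warning sign. The decisions, group labels, and helper names are hypothetical, and a single metric like this is a starting point for an audit, not proof that a system is fair.

```python
from collections import defaultdict

def selection_rates(decisions, groups):
    """Share of positive decisions (1 = approved) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += int(decision)
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 = parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0

# Hypothetical audit sample: model decisions and each applicant's group.
decisions = [1, 1, 1, 0, 1, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

rates = selection_rates(decisions, groups)
ratio = disparate_impact_ratio(rates)
print(rates)            # {'A': 0.8, 'B': 0.4}
print(f"{ratio:.2f}")   # 0.50
if ratio < 0.8:
    print("Below the four-fifths threshold: investigate features and training data")
```

Here group B is approved half as often as group A, so the check flags the model for closer review; explainability tools can then show which features drive the gap.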

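Governments and regulators worldwide are starting to set ethical standards, both through binding law such as the EU AI Act and through guidance such as the U.S. Blueprint for an AI Bill of Rights and India’s NITI Aayog principles for Responsible AI. All of them emphasize explainability and fairness.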

How XAI Works: Technical Methods

Model-Agnostic Tools

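LIME (Local Interpretable Model-agnostic Explanations): Explains one prediction at a time by perturbing the input and fitting a simple, interpretable model (such as a weighted linear model) that mimics the black box in that local neighborhood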

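SHAP (SHapley Additive exPlanations): Uses Shapley values from cooperative game theory to assign each feature a share of a specific prediction; the shares add up to the difference between that prediction and a baseline (average) prediction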
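To make the two model-agnostic ideas concrete, here is a minimal pure-Python sketch, assuming a hypothetical three-feature credit score as the “black box”. `baseline_shapley` computes exact Shapley values by enumerating every feature subset and filling “absent” features from a single baseline input (a simplification: the SHAP library averages over background data and uses much faster approximations). `local_surrogate` is a LIME-style sketch rather than the `lime` package itself: it samples perturbations around one input, weights them by closeness, and fits a weighted linear model whose coefficients act as local feature importances. All names, parameter values, and data are illustrative assumptions.

```python
import itertools
import math
import random

FEATURES = ["income", "debt", "years_employed"]

def model(x):
    """Hypothetical black-box score; the income*years term is an interaction."""
    income, debt, years = x
    return 0.5 * income - 0.8 * debt + 0.03 * income * years

def baseline_shapley(f, x, baseline):
    """Exact Shapley values; features outside a coalition take baseline values."""
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = math.factorial(size) * math.factorial(n - size - 1) / math.factorial(n)
            for subset in itertools.combinations(others, size):
                without_i = [x[j] if j in subset else baseline[j] for j in range(n)]
                with_i = list(without_i)
                with_i[i] = x[i]  # add feature i to the coalition
                phi[i] += weight * (f(with_i) - f(without_i))
    return phi

def solve(a, b):
    """Solve the small linear system a @ w = b by Gauss-Jordan elimination."""
    n = len(b)
    m = [list(row) + [b[k]] for k, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [vr - factor * vc for vr, vc in zip(m[r], m[col])]
    return [m[k][n] / m[k][k] for k in range(n)]

def local_surrogate(f, x, samples=500, scale=1.0, width=2.0, seed=0):
    """LIME-style sketch: weighted linear fit of f on perturbations around x."""
    rng = random.Random(seed)
    n = len(x)
    xtwx = [[0.0] * (n + 1) for _ in range(n + 1)]  # accumulates X^T W X
    xtwy = [0.0] * (n + 1)                          # accumulates X^T W y
    for _ in range(samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x]
        offsets = [zi - xi for zi, xi in zip(z, x)]
        w = math.exp(-sum(d * d for d in offsets) / width ** 2)  # nearer samples weigh more
        row = [1.0] + offsets                                    # intercept + feature offsets
        y = f(z)
        for a in range(n + 1):
            xtwy[a] += w * row[a] * y
            for b in range(n + 1):
                xtwx[a][b] += w * row[a] * row[b]
    coef = solve(xtwx, xtwy)
    return coef[1:]  # per-feature local importances (intercept dropped)

x = [50.0, 20.0, 5.0]
baseline = [30.0, 10.0, 2.0]

phi = baseline_shapley(model, x, baseline)
for name, value in zip(FEATURES, phi):
    print(f"{name}: {value:+.2f}")  # income: +12.10, debt: -8.00, years_employed: +3.60
print(f"sum = {sum(phi):.2f}, f(x) - f(baseline) = {model(x) - model(baseline):.2f}")  # both 7.70

coef = local_surrogate(model, x)
print([round(c, 2) for c in coef])  # close to the local slopes [0.65, -0.8, 1.5]
```

The two outputs answer slightly different questions: the Shapley values split the whole gap between this applicant and the baseline (including the income/years interaction), while the surrogate's coefficients describe how the score reacts to small changes around this one applicant.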

Transparent Models

Use simpler models like decision trees, linear models, or rule-based systems when appropriate
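A rule-based system is transparent because the rule that produced a decision can be shown to the user directly. The sketch below is a hypothetical loan rule list (the fields, units, and thresholds are invented for illustration, not taken from any real lender); each decision is returned together with the rule that fired, so the “why” comes with the “what”.

```python
from dataclasses import dataclass

@dataclass
class Applicant:
    income: float          # hypothetical unit: thousands per year
    debt_ratio: float      # monthly debt payments / monthly income
    missed_payments: int   # missed payments in the last 12 months

def decide(a: Applicant) -> tuple[str, str]:
    """Ordered rule list: the first rule that matches decides and is reported."""
    if a.missed_payments > 2:
        return "deny", f"missed_payments = {a.missed_payments} > 2"
    if a.debt_ratio > 0.45:
        return "deny", f"debt_ratio = {a.debt_ratio:.2f} > 0.45"
    if a.income < 25:
        return "refer to human review", f"income = {a.income} < 25"
    return "approve", "no deny or review rule matched"

print(decide(Applicant(income=60, debt_ratio=0.50, missed_payments=0)))
# ('deny', 'debt_ratio = 0.50 > 0.45')
print(decide(Applicant(income=60, debt_ratio=0.20, missed_payments=1)))
# ('approve', 'no deny or review rule matched')
```

Because the rules are checked in order, the explanation is exactly the first condition that matched. The price of this clarity is that hand-written thresholds may be less accurate than a learned model.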

Attention & Heatmaps

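In image and NLP systems, attention weights and saliency heatmaps highlight the regions or words that most influenced the outcome, though attention weights are only a rough guide to what the model actually relied on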
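Attention weights are internal to a specific network, so instead of reproducing them, the sketch below uses a different, model-agnostic way to build a heatmap: occlusion. Each patch of the input is blanked out in turn, and the drop in the model's score is recorded for that patch; large drops mark regions the prediction depends on. The `toy_model` (which only looks at the center of a 4×4 image), the patch size, and the fill value are hypothetical choices for illustration.

```python
def toy_model(image):
    """Hypothetical classifier score: mean brightness of the central 2x2 patch."""
    return sum(image[r][c] for r in (1, 2) for c in (1, 2)) / 4

def occlusion_map(model, image, patch=1, fill=0.0):
    """Heatmap of how much the score drops when each patch is blanked out."""
    base = model(image)
    h, w = len(image), len(image[0])
    heat = [[0.0] * w for _ in range(h)]
    for r0 in range(0, h, patch):
        for c0 in range(0, w, patch):
            occluded = [row[:] for row in image]  # copy, then blank one patch
            cells = [(r, c) for r in range(r0, min(r0 + patch, h))
                     for c in range(c0, min(c0 + patch, w))]
            for r, c in cells:
                occluded[r][c] = fill
            drop = base - model(occluded)
            for r, c in cells:
                heat[r][c] = drop
    return heat

image = [[1.0] * 4 for _ in range(4)]
for row in occlusion_map(toy_model, image):
    print(row)
# [0.0, 0.0, 0.0, 0.0]
# [0.0, 0.25, 0.25, 0.0]
# [0.0, 0.25, 0.25, 0.0]
# [0.0, 0.0, 0.0, 0.0]
```

Only the center cells light up, matching what the toy model actually uses. On a real network this costs one forward pass per patch, which is why larger patches are common in practice.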

Counterfactual Explanations

Tells users what would have to change to get a different outcome: “If X had been different, the AI would have predicted Y instead.”
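A counterfactual explanation can be found by searching for the smallest change to the input that flips the model's decision. The sketch below is a deliberately simple version that assumes a hypothetical approval rule (`approve`) and changes only one feature at a time in fixed steps; practical counterfactual methods search over several features at once and add constraints so the suggested change is realistic and actionable.

```python
def approve(income, debt):
    """Hypothetical decision rule standing in for a trained model."""
    return 0.6 * income - 1.0 * debt >= 20

def single_feature_counterfactuals(x, model, step=1.0, max_steps=100):
    """For each feature, the nearest value (in whole steps) that flips the decision."""
    original = model(**x)
    results = {}
    for name in x:
        for k in range(1, max_steps + 1):
            for direction in (+1, -1):
                candidate = dict(x)
                candidate[name] = x[name] + direction * k * step
                if model(**candidate) != original:
                    results[name] = candidate[name]
                    break
            if name in results:
                break
    return results

applicant = {"income": 40.0, "debt": 8.5}
print(approve(**applicant))                                  # False
print(single_feature_counterfactuals(applicant, approve))   # {'income': 48.0, 'debt': 3.5}
```

Read as explanations, the result says: “If income had been 48 instead of 40, the application would have been approved,” or “If debt had been 3.5 instead of 8.5, it would have been approved.”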

Black Box vs. Glass Box

| Feature | Black Box AI | Explainable (Glass Box) AI |
|---|---|---|
| Understandability | Low | High |
| Trust Factor | Low | High |
| Regulatory Compliance | Harder to demonstrate | Easier to demonstrate |
| Model Type | Often Deep Learning | Often Transparent Models |

Challenges in XAI & Ethical AI

Trade-off Between Accuracy and Explainability

More complex models often perform better, but they are harder to explain.

Over-simplification Risk

Explanations must not mislead. A simplified explanation can hide actual risks.

Cultural and Legal Differences

What is “ethical” in one country might not be in another. Global companies must navigate this carefully.

Bias in Training Data

If training data is biased, the AI will be too — even if it’s explainable.

The Future of Explainable & Ethical AI

AI Auditors & Ethics Boards are likely to become standard in tech companies

Human-in-the-loop systems will provide oversight of critical AI decisions

Legislation will increasingly give users a “right to explanation” for automated decisions that affect them

Explainability-by-design will become a core part of AI development workflows
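The human-in-the-loop and auditing ideas above can be sketched as a small gate around a model: predictions below a confidence threshold are routed to a human reviewer, and every decision is appended to an audit log along with its explanation. The threshold of 0.9, the record fields, the `gate` function, and the in-memory `AUDIT_LOG` list are assumptions for illustration; a production system would write records to durable storage and send flagged cases to a real review queue.

```python
import json
import time
from dataclasses import asdict, dataclass, field

@dataclass
class DecisionRecord:
    case_id: str
    prediction: str
    confidence: float
    explanation: dict          # e.g. per-feature contributions from SHAP or LIME
    routed_to_human: bool
    timestamp: float = field(default_factory=time.time)

AUDIT_LOG: list[DecisionRecord] = []  # hypothetical in-memory audit trail

def gate(case_id, prediction, confidence, explanation, threshold=0.9):
    """Route low-confidence predictions to a human and log every decision."""
    record = DecisionRecord(case_id, prediction, confidence, explanation,
                            routed_to_human=confidence < threshold)
    AUDIT_LOG.append(record)
    return record

r1 = gate("case-001", "deny", 0.97, {"debt_ratio": -0.42})
r2 = gate("case-002", "approve", 0.62, {"income": 0.31})
print(r1.routed_to_human, r2.routed_to_human)  # False True
print(json.dumps(asdict(r2)))                  # serializable record for auditors
```

Logging the explanation next to the decision is what makes the system traceable later: an auditor can see not only what was decided and by whom (model or human), but also which features the model said it relied on.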

Conclusion

AI’s impact is growing every day—but so are the questions around trust, bias, and responsibility. Explainable and ethical AI isn’t just a technical feature—it’s a moral and social necessity.

As AI moves deeper into health, law, finance, and governance, it must be held to higher standards of transparency, fairness, and accountability.

Because in the end, if we want to trust AI with big decisions — it must earn that trust.

-Futurla