Introduction: The Environmental Cost of Intelligence

Artificial intelligence is often praised for its brilliance, but behind the scenes it has a massive carbon footprint. Training large language models such as GPT and Gemini consumes enormous amounts of electricity, and one widely cited 2019 estimate found that training a single large Transformer model, including an automated search for its architecture, emitted roughly as much CO₂ as five cars over their lifetimes.

In 2025, as climate concerns intensify and regulations tighten, AI must evolve — not just to be smarter, but greener.

Welcome to the world of Green AI: the growing movement to make artificial intelligence sustainable, efficient, and environmentally responsible.

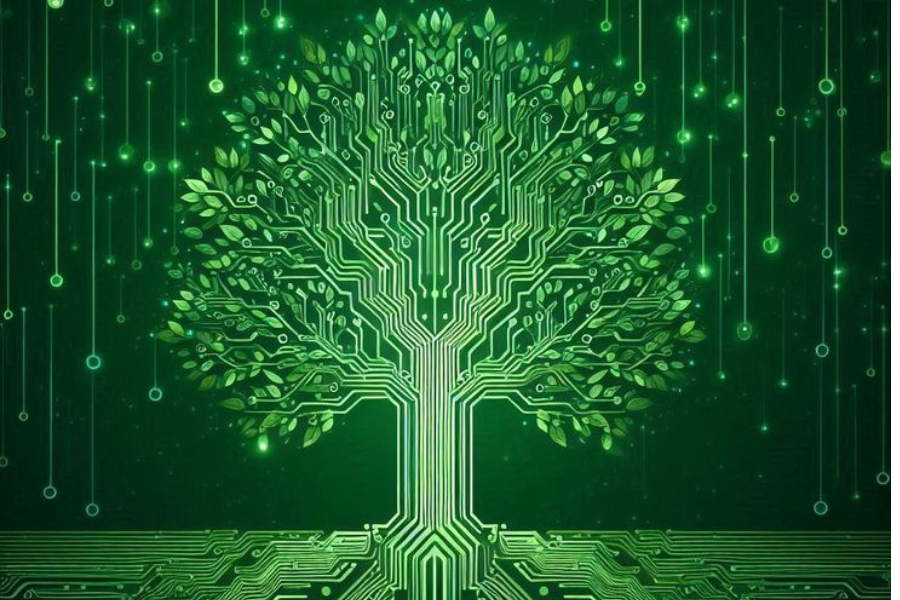

What Is Green AI?

Green AI refers to the design, development, and deployment of AI systems with environmental sustainability as a core priority. It focuses on:

Reducing energy and resource consumption in training and inference

Using AI to optimize environmental systems (e.g., smart grids, agriculture, weather prediction)

Promoting transparency about computational and carbon costs (for example, reporting the floating-point operations, or FLOPs, needed to produce a result)

Coined in 2019 by researchers at the Allen Institute for AI, the term “Green AI” now reflects a global shift in how we evaluate the costs and benefits of intelligence.

The Problem: AI’s Hidden Carbon Cost

Training large AI models is extremely resource-intensive. Consider:

- GPT-3 required ~1,287 MWh of electricity to train — the same as powering 120 U.S. homes for a year

- Training a single large model can emit more than 280 metric tons of CO₂

- Data centers, which run AI workloads alongside everything else, already account for roughly 1 to 2% of global electricity consumption
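Figures like these come from simple unit conversions. The sketch below reproduces them under two loudly stated assumptions: an average U.S. home uses about 10.7 MWh of electricity per year, and the grid emits about 0.429 kg CO₂e per kWh. Both constants are illustrative choices, not authoritative values; real numbers vary by year, region, and data center.

```python
# Back-of-the-envelope conversions for model-training energy.
# ASSUMPTIONS (illustrative constants, not authoritative figures):
#   - an average U.S. home uses about 10.7 MWh of electricity per year
#   - the grid emits about 0.429 kg CO2e per kWh generated

HOME_MWH_PER_YEAR = 10.7        # assumed household consumption
GRID_KG_CO2E_PER_KWH = 0.429    # assumed grid carbon intensity


def home_years(energy_mwh: float, home_mwh_per_year: float = HOME_MWH_PER_YEAR) -> float:
    """Number of homes this much energy could power for one year."""
    return energy_mwh / home_mwh_per_year


def emissions_tonnes(energy_mwh: float, kg_per_kwh: float = GRID_KG_CO2E_PER_KWH) -> float:
    """Metric tonnes of CO2e: MWh -> kWh (x1000), times kg/kWh, then kg -> t (/1000)."""
    return energy_mwh * 1000 * kg_per_kwh / 1000


if __name__ == "__main__":
    gpt3_mwh = 1287  # training energy quoted in the list above
    print(f"{home_years(gpt3_mwh):.0f} home-years")    # prints "120 home-years"
    print(f"{emissions_tonnes(gpt3_mwh):.0f} t CO2e")  # prints "552 t CO2e"
```

The emissions result is only as good as the assumed grid intensity: the same training run on a low-carbon grid would emit a small fraction of this, which is why where and when a model is trained matters as much as how big it is.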

And it’s not just training. Running AI models (inference) across millions of devices daily contributes further to environmental strain.

Key Principles of Green AI

1. Efficiency-first development

Favor algorithms that deliver strong performance with less computation, rather than chasing accuracy at any cost.

2. Transparency in carbon reporting

Encourage researchers to publish compute cost (FLOPs, energy use, CO₂) alongside accuracy metrics.

3. Hardware optimization

Leverage energy-efficient hardware such as Google’s TPUs, Apple’s Neural Engine, or edge devices that run AI locally rather than in a data center.

4. Model compression & pruning

Smaller, more efficient models and techniques (such as DistilBERT, TinyML models for microcontrollers, or LoRA fine-tuning of LLMs) can deliver strong performance at a fraction of the cost.
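Pruning is the easiest of these techniques to show in a few lines. Here is a minimal sketch of unstructured magnitude pruning, one common approach among several, applied to a plain list of weights. The function names `magnitude_prune` and `sparsity_of` are hypothetical and not taken from any particular library.

```python
from typing import List


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the smallest-magnitude fraction `sparsity` of the weights.

    Unstructured magnitude pruning assumes the weights with the smallest
    absolute values contribute least to the output, so they are set to zero.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered by absolute weight, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned_idx = set(order[:n_prune])
    return [0.0 if i in pruned_idx else w for i, w in enumerate(weights)]


def sparsity_of(weights: List[float]) -> float:
    """Fraction of weights that are exactly zero."""
    return sum(1 for w in weights if w == 0.0) / len(weights)


if __name__ == "__main__":
    w = [0.8, -0.05, 0.3, -0.9, 0.01, 0.2, -0.4, 0.07]
    pruned = magnitude_prune(w, sparsity=0.5)
    print(pruned)               # prints [0.8, 0.0, 0.3, -0.9, 0.0, 0.0, -0.4, 0.0]
    print(sparsity_of(pruned))  # prints 0.5
```

Zeroed weights only save energy when the runtime or hardware can skip them (for example, with sparse kernels or structured pruning that removes whole rows or channels); otherwise the model compresses better on disk but does not run faster.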

How AI Can Help the Planet

Green AI isn’t just about reducing its own footprint — it can also enable sustainability across sectors:

1. Energy Optimization

AI is used to balance loads in smart grids, predict demand, and reduce waste. DeepMind reported that its machine-learning system cut the energy used to cool Google’s data centers by up to 40%.

2. Agriculture & Water Management

AI models optimize crop yield, monitor soil health, and reduce water usage via precision irrigation systems.

3. Climate Prediction

Projects like ClimateAi use deep learning to improve weather forecasts, helping farmers, disaster teams, and city planners prepare for extreme events.

4. Wildlife Protection

AI-powered drones and camera traps identify poachers, monitor endangered species, and track illegal deforestation in real time.

How It Works: Behind the Scenes

Multimodal AI involves three key components:

1. Input Encoders

Each type of input (text, images, audio) is converted into a numerical representation, or embedding, using modality-specific encoders (such as CNNs for images or Transformers for text).

2. Fusion Layer

The encoded data is then merged in a “fusion” step. Techniques include early fusion (combining features before further processing), late fusion (combining each modality’s outputs), and joint embedding spaces.

3. Unified Reasoning & Output

The fused representation is passed through neural reasoning layers to generate predictions, summaries, or actions in any modality (text responses, image generation, etc.).
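The difference between early and late fusion can be illustrated with toy vectors. This is a minimal sketch that assumes each modality’s encoder has already produced a small embedding, and it uses made-up weights in place of trained layers. All names and numbers are hypothetical.

```python
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def early_fusion(text_emb: Vector, image_emb: Vector, weights: Vector) -> float:
    """Concatenate the embeddings first, then apply one joint linear scorer."""
    fused = text_emb + image_emb  # list concatenation = feature concatenation
    if len(weights) != len(fused):
        raise ValueError("weights must match the fused embedding length")
    return dot(fused, weights)


def late_fusion(text_emb: Vector, image_emb: Vector,
                text_w: Vector, image_w: Vector) -> float:
    """Score each modality separately, then average the two scores."""
    return (dot(text_emb, text_w) + dot(image_emb, image_w)) / 2


if __name__ == "__main__":
    text = [0.5, 1.0]   # hypothetical text-encoder output
    image = [2.0, 0.0]  # hypothetical image-encoder output
    print(early_fusion(text, image, [1.0, 1.0, 0.5, 0.5]))  # prints 2.5
    print(late_fusion(text, image, [1.0, 1.0], [0.5, 0.5]))  # prints 1.25
```

With purely linear scorers, as here, late fusion is just early fusion with halved weights. The distinction matters once the joint layer is nonlinear: only early fusion (or a joint embedding space) lets the model learn interactions between modalities, at the cost of processing the larger fused vector.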

Global Impact & Industry Shifts

Big Tech Steps In:

Google Cloud & Microsoft Azure now offer carbon-aware compute options

Meta is building carbon-neutral AI training facilities

Hugging Face model cards can include CO₂ emissions estimates, which the Hub displays for open-source models that report them
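Carbon-aware compute rests on a simple idea: run flexible jobs when the grid is cleanest. Here is a minimal sketch, assuming you already have an hourly carbon-intensity forecast in g CO₂e/kWh. The forecast values and the `greenest_window` function are hypothetical and do not correspond to any cloud provider’s API.

```python
from typing import List, Tuple


def greenest_window(forecast: List[float], hours: int) -> Tuple[int, float]:
    """Return (start_hour, average_intensity) for the contiguous block of
    `hours` slots with the lowest mean carbon intensity.

    A sliding-window sum adds and removes each hour exactly once, so the
    scan is linear in the length of the forecast.
    """
    if not 1 <= hours <= len(forecast):
        raise ValueError("hours must be between 1 and len(forecast)")
    window = sum(forecast[:hours])
    best_start, best_sum = 0, window
    for start in range(1, len(forecast) - hours + 1):
        # Slide right by one hour: add the newest slot, drop the oldest.
        window += forecast[start + hours - 1] - forecast[start - 1]
        if window < best_sum:
            best_start, best_sum = start, window
    return best_start, best_sum / hours


if __name__ == "__main__":
    # Hypothetical 8-hour forecast (g CO2e/kWh); midday solar lowers intensity.
    forecast = [450, 430, 400, 300, 220, 210, 260, 390]
    print(greenest_window(forecast, hours=3))  # prints (4, 230.0)
```

A real scheduler would also weigh deadlines, price, and forecast uncertainty, but the core decision of shifting a deferrable job into its lowest-carbon window is this small.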

Open Source Efficiency:

Open models like Mistral 7B, Phi-3, and Llama 3 match or outperform much larger models on many benchmarks, at a far lower environmental cost.

Green AI Startups:

Cohere for AI, Greenwave, and Anthropic are pioneering low-impact LLM development.

Trends to Watch

| Trend | Description |
|---|---|
| Green AI Metrics | Accuracy, carbon use, and FLOPs becoming the new benchmark trio |
| Smaller foundation models | LoRA, TinyML, and modular architectures gaining traction |
| Bio-inspired AI | Energy-efficient algorithms inspired by nature and the brain |
| AI + Renewable Energy | AI helping optimize solar and wind generation and battery storage |
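One way to report the accuracy, carbon, and FLOPs trio is to publish estimated training compute alongside the other two numbers. The sketch below relies on the widely used approximation that training a dense Transformer costs about 6 FLOPs per parameter per training token. The `ModelReport` class and every number in the usage example are hypothetical placeholders, not measurements.

```python
from dataclasses import dataclass


def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate training compute for a dense Transformer: ~6 * N * D.

    Roughly 2 FLOPs per parameter per token for the forward pass and about
    4 for the backward pass. This is an estimate, not a measurement.
    """
    return 6.0 * n_params * n_tokens


@dataclass
class ModelReport:
    """Hypothetical record pairing accuracy with its compute and carbon cost."""
    name: str
    accuracy: float     # task accuracy, 0 to 1
    train_flops: float  # estimated training FLOPs
    co2e_tonnes: float  # estimated training emissions, metric tonnes

    def summary(self) -> str:
        return (f"{self.name}: acc={self.accuracy:.1%}, "
                f"FLOPs={self.train_flops:.2e}, CO2e={self.co2e_tonnes:.1f} t")


if __name__ == "__main__":
    # Placeholder values for a hypothetical 1B-parameter model trained on 20B tokens.
    flops = training_flops(n_params=1e9, n_tokens=20e9)
    report = ModelReport("demo-1b", accuracy=0.712, train_flops=flops, co2e_tonnes=3.5)
    print(report.summary())
    # prints "demo-1b: acc=71.2%, FLOPs=1.20e+20, CO2e=3.5 t"
```

Reporting all three numbers together lets readers compare models on efficiency, not just on leaderboard accuracy.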

Conclusion

AI is one of the most powerful tools humanity has ever created, but with great power comes great environmental responsibility.

Green AI doesn’t mean sacrificing performance. It means building smarter, cleaner, and more conscious intelligence that benefits both people and the planet.

In the years ahead, sustainable AI won’t be optional — it will be a requirement. Whether you’re a developer, researcher, or enterprise leader, now is the time to ask:

“Is my AI helping the world — or hurting it?”

-Futurla